Oakley Progress Report

I've made quite a bit of progress since I last blogged about Oakley. I might actually be able to release an alpha version in the next decade!

Better Numbers

- No need to specify the type of number anymore, e.g. you can now write 12 rather than 12b.

- Binary literals, e.g. 0b00111011.

- Numeric separators to make it easier to read large numbers, e.g. 12_345. Supported for decimal, hex and binary numbers.
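To make these rules concrete, here is a small Rust sketch of how a compiler might read such literals: strip the 0x or 0b prefix, drop the _ separators, then pick a type. The function names are made up, and the rule that a literal becomes a Byte when it fits in 8 bits and a Word otherwise is an assumption about how the type is inferred, not something stated above.

```rust
// Hypothetical sketch: parse an Oakley-style numeric literal and infer its type.
// Assumption: a literal is a Byte (u8) if it fits in 8 bits, otherwise a Word (u16).

#[derive(Debug, PartialEq)]
enum NumberType {
    Byte,
    Word,
}

fn parse_literal(text: &str) -> Result<(u16, NumberType), String> {
    // Work out the radix from the prefix, if there is one.
    let (digits, radix) = if let Some(rest) = text.strip_prefix("0x") {
        (rest, 16)
    } else if let Some(rest) = text.strip_prefix("0b") {
        (rest, 2)
    } else {
        (text, 10)
    };

    // Separators are purely visual, so drop them before converting.
    let cleaned: String = digits.chars().filter(|&c| c != '_').collect();
    if cleaned.is_empty() {
        return Err(format!("'{}' has no digits", text));
    }

    let value = u16::from_str_radix(&cleaned, radix)
        .map_err(|e| format!("'{}' is not a valid number: {}", text, e))?;

    // Pick the smallest type that can hold the value.
    let number_type = if value <= u8::MAX as u16 {
        NumberType::Byte
    } else {
        NumberType::Word
    };
    Ok((value, number_type))
}

fn main() {
    for literal in ["12", "0b00111011", "12_345", "0xFF"].iter() {
        println!("{} -> {:?}", literal, parse_literal(literal));
    }
}
```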

Error Reporting

The compiler now actually reports some errors, as opposed to crashing hideously, which is nice. It produces a hopefully useful error message along with the piece of code causing the problem:

    Error E0011: Hex literals must have an even number of digits.
    In System.Spectrum.Screen.oakley at line 26, column 19.
    Plot(coords.X, 0x102);
                   ^^^^^
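A rough Rust sketch of the two pieces behind a message like this: the even-digit check for E0011 and the caret underline. The function names, the file name and the exact layout are assumptions; the column is counted within whatever source line is passed in.

```rust
// Hypothetical sketch of the E0011 check plus an error report with a caret
// underline. Function names and the exact layout are assumptions.

/// Returns the E0011 message if a hex literal has an odd number of digits.
fn check_hex_literal(literal: &str) -> Option<String> {
    let digits = literal.strip_prefix("0x").unwrap_or(literal);
    // Numeric separators don't count as digits.
    let digit_count = digits.chars().filter(|&c| c != '_').count();
    if digit_count % 2 != 0 {
        Some("Hex literals must have an even number of digits.".to_string())
    } else {
        None
    }
}

/// Builds the report: code and message, location, the source line, and a row
/// of carets under the offending text. `column` is 1-based within `source_line`.
fn format_error(
    code: &str,
    message: &str,
    file: &str,
    line: usize,
    column: usize,
    source_line: &str,
    length: usize,
) -> String {
    let padding = " ".repeat(column.saturating_sub(1));
    let carets = "^".repeat(length);
    format!(
        "Error {}: {}\nIn {} at line {}, column {}.\n{}\n{}{}",
        code, message, file, line, column, source_line, padding, carets
    )
}

fn main() {
    let source_line = "Plot(coords.X, 0x102);";
    let literal = "0x102";
    // 1-based column of the literal within this line.
    let column = source_line.find(literal).unwrap() + 1;
    if let Some(message) = check_hex_literal(literal) {
        // "Example.oakley" is a hypothetical file name.
        let report = format_error("E0011", &message, "Example.oakley", 26, column, source_line, literal.len());
        println!("{}", report);
    }
}
```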

Type Checking

There is now some type checking in place rather than relying on z88dk giving me an error when compiling the resultant C code. If you try to use an incompatible type you'll be told, along with a list of allowed types where possible:

    Error E0019: Cannot resolve method call Plot(System.Byte, const System.String). Candidates are:
    Plot(System.Byte, System.Byte) (in type Screen)
    In System.Spectrum.Screen.oakley at line 26, column 4.
    Plot(coords.X, "Invalid");
    ^^^^^^^^^^^^^^^^^^^^^^^^^

Overloads

Methods, operators and constructors can now be overloaded, i.e. you can have multiple methods/operators/constructors on the same type with the same name provided they have different parameters.
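Overload resolution boils down to matching the argument types against each candidate's parameter types. The Rust sketch below does the simplest possible version (exact matches only, no implicit conversions) and, when nothing matches, builds a message in the style of E0019. The Method struct, the resolve function and the second Plot overload are hypothetical; Oakley's real rules may well be more lenient.

```rust
// Hypothetical sketch of overload resolution: choose the overload whose
// parameter types exactly match the argument types, otherwise report every
// candidate in the style of error E0019. Implicit conversions are ignored.

#[derive(Debug)]
struct Method {
    name: &'static str,
    params: Vec<&'static str>,
    owner: &'static str,
}

fn resolve<'a>(methods: &'a [Method], name: &str, args: &[&str]) -> Result<&'a Method, String> {
    // All overloads with the right name are candidates.
    let candidates: Vec<&'a Method> = methods.iter().filter(|m| m.name == name).collect();

    // Pick the candidate whose parameter list matches the arguments exactly.
    if let Some(found) = candidates.iter().find(|m| m.params.as_slice() == args) {
        return Ok(*found);
    }

    // No match: list the candidates so the user can see what is allowed.
    let mut message = format!(
        "Error E0019: Cannot resolve method call {}({}). Candidates are:",
        name,
        args.join(", ")
    );
    for candidate in &candidates {
        message.push_str(&format!(
            "\n{}({}) (in type {})",
            candidate.name,
            candidate.params.join(", "),
            candidate.owner
        ));
    }
    Err(message)
}

fn main() {
    // The Word overload is hypothetical, added to show two overloads side by side.
    let methods = vec![
        Method { name: "Plot", params: vec!["System.Byte", "System.Byte"], owner: "Screen" },
        Method { name: "Plot", params: vec!["System.Word", "System.Word"], owner: "Screen" },
    ];
    match resolve(&methods, "Plot", &["System.Byte", "System.String"]) {
        Ok(method) => println!("Resolved: {}({})", method.name, method.params.join(", ")),
        Err(error) => println!("{}", error),
    }
}
```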

Type Classes

Type classes have been added. Somewhere between Haskell's type classes and Scala's traits, they provide a way to share code between types and a form of polymorphism. For example, you could define an Equatable type class that represents checking whether two values are equal:

    typeclass Equatable[T]
    {
        public Boolean (==)(T other);

        public Boolean (!=)(T other)
        {
            return !(this == other);
        }
    }

Note how == has no implementation! This means you must implement this method when you implement the type class:

    type SomeType : Equatable[SomeType]
    {
        public Byte Id;

        public constructor(Byte id)
        {
            Id = id;
        }

        public Boolean (==)(SomeType other)
        {
            return Id == other.Id;
        }
    }

Your type now gains the != operator for free as it was defined in the type class:

    SomeType x = SomeType(4);
    SomeType y = SomeType(5);
    Console.WriteLine(x != y);
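For comparison, Rust traits work in much the same way: a trait can declare a method with no body, which every implementer must supply, alongside a default method written in terms of it. Rust's own PartialEq does exactly this, providing ne for free once eq is implemented. The sketch mirrors Equatable[T] using hypothetical method names, since Rust's == and != are already tied to PartialEq; it is an analogue, not how Oakley compiles type classes.

```rust
// Rough Rust analogue of the Equatable[T] type class (illustration only).
trait Equatable<T> {
    // Required: every implementer must provide this, like == above.
    fn equals(&self, other: &T) -> bool;

    // Provided: implementers get this for free, like != above.
    fn not_equals(&self, other: &T) -> bool {
        !self.equals(other)
    }
}

struct SomeType {
    id: u8,
}

impl SomeType {
    fn new(id: u8) -> SomeType {
        SomeType { id }
    }
}

// Only equals is implemented; not_equals comes from the trait.
impl Equatable<SomeType> for SomeType {
    fn equals(&self, other: &SomeType) -> bool {
        self.id == other.id
    }
}

fn main() {
    let x = SomeType::new(4);
    let y = SomeType::new(5);
    println!("{}", x.not_equals(&y)); // prints "true"
}
```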

You can also use type classes polymorphically, i.e. write code that refers to the type class rather than the type itself. For example, consider the following type class and types:

    typeclass Identifiable
    {
        public Byte GetId();
    }

    type AnotherType : Identifiable
    {
        public Byte Id;

        public Byte GetId()
        {
            return Id;
        }
    }

    type YetAnotherType : Identifiable
    {
        public Byte GetId()
        {
            return 125;
        }
    }

Both implement Identifiable but have very different implementations. We could then write a method that uses the type class:

    public static void Write(Identifiable hasId)
    {
        Console.WriteLine(hasId.GetId());
    }

Because both AnotherType and YetAnotherType implement Identifiable, they can both be passed to this method:

    AnotherType a = AnotherType();
    Write(a);
    YetAnotherType y = YetAnotherType();
    Write(y);
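In Rust the closest equivalent is a trait object: a &dyn Identifiable parameter accepts any type that implements the trait, and the right get_id is picked at run time. This is only a comparison; how Oakley dispatches these calls in its generated C isn't described here. The id value 3 is arbitrary, and this version of write returns the id as well as printing it so that it is easy to check.

```rust
// Rough Rust analogue of using the Identifiable type class polymorphically.
trait Identifiable {
    fn get_id(&self) -> u8;
}

struct AnotherType {
    id: u8,
}

impl Identifiable for AnotherType {
    fn get_id(&self) -> u8 {
        self.id
    }
}

struct YetAnotherType;

impl Identifiable for YetAnotherType {
    fn get_id(&self) -> u8 {
        125
    }
}

// Accepts anything that implements Identifiable; the call is dispatched
// at run time through the trait object's vtable.
fn write(has_id: &dyn Identifiable) -> u8 {
    let id = has_id.get_id();
    println!("{}", id);
    id
}

fn main() {
    let a = AnotherType { id: 3 };
    write(&a); // prints "3"
    let y = YetAnotherType;
    write(&y); // prints "125"
}
```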

Generic Constraints

Having type classes makes generic constraints possible. These let us constrain a generic parameter so that it must implement one or more type classes:

    type UsesIds[T]
        where T : Identifiable
    {
        public static void Write(T hasId)
        {
            Console.WriteLine(hasId.GetId());
        }
    }

The above code says that we can use any type we like for T provided it implements Identifiable. This then means we can use the GetId method on T inside the type as we can be sure it has one. Trying to use a type that isn't Identifiable won't work:

    UsesIds[YetAnotherType] x = UsesIds[YetAnotherType](); // Compiles.
    UsesIds[Byte] y = UsesIds[Byte](); // Does not compile.
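Rust expresses the same idea with a trait bound, T: Identifiable. The sketch below is a rough analogue of UsesIds[T]; the PhantomData field is only there because Rust requires a struct to use its type parameters, and the commented-out line is the equivalent of UsesIds[Byte] being rejected.

```rust
use std::marker::PhantomData;

trait Identifiable {
    fn get_id(&self) -> u8;
}

struct YetAnotherType;

impl Identifiable for YetAnotherType {
    fn get_id(&self) -> u8 {
        125
    }
}

// Like `where T : Identifiable`: T may be any type that implements Identifiable.
struct UsesIds<T: Identifiable> {
    _marker: PhantomData<T>,
}

impl<T: Identifiable> UsesIds<T> {
    fn new() -> Self {
        UsesIds { _marker: PhantomData }
    }

    // The bound guarantees that T has get_id, so calling it here is allowed.
    fn write(has_id: &T) -> u8 {
        let id = has_id.get_id();
        println!("{}", id);
        id
    }
}

fn main() {
    let _x: UsesIds<YetAnotherType> = UsesIds::new(); // Compiles.
    UsesIds::<YetAnotherType>::write(&YetAnotherType);
    // let y: UsesIds<u8> = UsesIds::new(); // Does not compile: u8 is not Identifiable.
}
```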

A type can be constrained with as many type classes as you like. It is also possible to constrain by a set of types, forcing the type parameter to be one of the specified types. The standard library Array type uses this to specify that the length of an array can only be a Byte or a Word:

    type Array[T, TLength]
        where TLength : Byte | Word
    {
        ...
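Rust has no direct equivalent of where TLength : Byte | Word, but a common workaround is a sealed trait: a trait that code outside its module cannot implement, so only the types chosen inside that module (u8 for Byte, u16 for Word) can ever satisfy it. This is a swapped-in technique for illustration, and the Array fields and methods are hypothetical.

```rust
// Sealed-trait sketch: only u8 (Byte) and u16 (Word) can be array lengths.
mod array_length {
    mod private {
        pub trait Sealed {}
        impl Sealed for u8 {}
        impl Sealed for u16 {}
    }

    // Code outside this module cannot name private::Sealed, so it cannot
    // add new implementations of ArrayLength.
    pub trait ArrayLength: private::Sealed + Copy + Into<usize> {}
    impl ArrayLength for u8 {}
    impl ArrayLength for u16 {}
}

use array_length::ArrayLength;

// Hypothetical Array: TLength is restricted to the sealed set of types.
struct Array<T, TLength: ArrayLength> {
    items: Vec<T>,
    length: TLength,
}

impl<T: Clone + Default, TLength: ArrayLength> Array<T, TLength> {
    fn new(length: TLength) -> Self {
        Array { items: vec![T::default(); length.into()], length }
    }

    fn len(&self) -> TLength {
        self.length
    }

    fn item_count(&self) -> usize {
        self.items.len()
    }
}

fn main() {
    let small: Array<u8, u8> = Array::new(5);
    let large: Array<u8, u16> = Array::new(500);
    println!("{} {}", small.len(), large.item_count());
    // let bad: Array<u8, u32> = Array::new(5); // Does not compile: u32 is not an ArrayLength.
}
```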

Default Generic Parameters

Defaults can now be specified for type parameters, which will be used if they are not specified. Array uses this to default TLength as most of the time your arrays will be fairly small:

    type Array[T, TLength = Byte]

You then do not need to specify TLength most of the time, only needing to for large arrays:

    Array[SomeType] array = Array[SomeType](5);
    Array[SomeType, Word] largeArray = Array[SomeType, Word](500);
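Rust also allows defaults on type parameters, so Array[T, TLength = Byte] translates fairly directly. The fields and the constructor, which fills the array with default values, are assumptions for the sketch. One difference worth knowing: in Rust the default applies where the type is written out, such as in a variable's type annotation, which is why the variables below are annotated.

```rust
// TLength defaults to u8 (Byte), like `type Array[T, TLength = Byte]`.
struct Array<T, TLength = u8> {
    items: Vec<T>,
    length: TLength,
}

impl<T: Clone + Default, TLength: Copy + Into<usize>> Array<T, TLength> {
    // Hypothetical constructor: fills the array with default values.
    fn new(length: TLength) -> Self {
        Array { items: vec![T::default(); length.into()], length }
    }

    fn len(&self) -> TLength {
        self.length
    }

    fn item_count(&self) -> usize {
        self.items.len()
    }
}

#[derive(Clone, Default)]
struct SomeType;

fn main() {
    // TLength is left out, so it is u8.
    let array: Array<SomeType> = Array::new(5);
    // Large arrays spell out u16 (Word) explicitly.
    let large_array: Array<SomeType, u16> = Array::new(500);
    println!("{} {}", array.len(), large_array.item_count());
}
```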

TThis

Every type and type class in Oakley has a built-in generic type parameter, TThis, which is the current type. On the surface that doesn't sound very useful - don't you always know what type you are? Well, not if you're a type class. When a type implements a type class, TThis is swapped out for that type. Confusing, I know. Let's look at how we might define Equatable using TThis:

    typeclass Equatable
    {
        public Boolean (==)(TThis other);
        ...

Our implementation is similar to before except we no longer need the type parameter on Equatable:

    type SomeType : Equatable
    {
        public Boolean (==)(SomeType other)
        ...
    }

Could we not have done this using Equatable itself?

    typeclass Equatable
    {
        public Boolean (==)(Equatable other);
        ...

No, because our implementation in SomeType would then have to be:

    type SomeType : Equatable
    {
        public Boolean (==)(Equatable other)
        ...
    }

Which is very different - it is saying that == will take anything that implements Equatable rather than specifically SomeType.

The implementation of Equatable in the standard library uses TThis with default generic parameters:

    typeclass Equatable[T = TThis]
    {
        public Boolean (==)(T other);
        ...

This means that if you don't specify a type parameter it will use the current type; otherwise it will use the type you specified.
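Rust has a close parallel: Self plays the role of TThis, and a trait can default a type parameter to it. The standard library declares PartialEq as PartialEq<Rhs: ?Sized = Self>, which is essentially Equatable[T = TThis]. The sketch below uses hypothetical names again: it implements the trait once without a type parameter, so T defaults to SomeType, and once with an explicit u8.

```rust
// Rough analogue of `typeclass Equatable[T = TThis]`: T defaults to Self.
trait Equatable<T = Self> {
    fn equals(&self, other: &T) -> bool;

    fn not_equals(&self, other: &T) -> bool {
        !self.equals(other)
    }
}

struct SomeType {
    id: u8,
}

// No type parameter given, so T defaults to Self (SomeType).
impl Equatable for SomeType {
    fn equals(&self, other: &SomeType) -> bool {
        self.id == other.id
    }
}

// The parameter can still be given explicitly, e.g. to compare with a raw u8.
impl Equatable<u8> for SomeType {
    fn equals(&self, other: &u8) -> bool {
        self.id == *other
    }
}

fn main() {
    let x = SomeType { id: 4 };
    let y = SomeType { id: 5 };
    println!("{}", x.not_equals(&y)); // SomeType vs SomeType: prints "true"
    println!("{}", x.equals(&4u8)); // SomeType vs u8: prints "true"
}
```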

Enums

Enums are strongly typed numbers. For example the standard library has an enum for the ZX Spectrum colours:

    enum Colour
    {
        Black = 0;
        Blue = 1;
        Red = 2;
        Magenta = 3;
        Green = 4;
        Cyan = 5;
        Yellow = 6;
        White = 7;
    }

You can use these as you would any other type, e.g.:

    public static void SetBorder(Colour colour)
    {
        ...

Why would you use an enum rather than a number? The first reason is that you cannot use a number you haven't defined in the enum. If you used a number, then someone could pass the number 8 to SetBorder above, which is invalid.

The second reason is readability - it's much easier to work out what code is doing with enums as they have sensible names. Whilst you might remember all the numbers for the Spectrum colours, other values might be harder to remember. Which of the following two lines of code is easier to understand?

    Hardware.SetRegister(7, 1);
    Hardware.SetRegister(Register.TurboControl, Turbo.Double);

The third reason is type safety. It's easy to pass the wrong number to something, but it's impossible to pass the wrong enum type because the compiler prevents it:

    Hardware.SetRegister(7, 1); // Compiles.
    Hardware.SetRegister(1, 7); // Compiles.
    Hardware.SetRegister(Register.TurboControl, Turbo.Double); // Compiles.
    Hardware.SetRegister(Turbo.Double, Register.TurboControl); // Does not compile.
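In Rust, a #[repr(u8)] enum gives the same guarantees: values are stored as a byte, a function taking a Colour cannot be handed a raw number, and turning a number back into a Colour has to go through a checked conversion that rejects 8. The TryFrom implementation and set_border are written by hand for the sketch; the real SetBorder would write to the hardware rather than return a value.

```rust
use std::convert::TryFrom;

// Stored as a single byte, like a Byte-based Oakley enum.
#[derive(Debug, Clone, Copy, PartialEq)]
#[repr(u8)]
enum Colour {
    Black = 0,
    Blue = 1,
    Red = 2,
    Magenta = 3,
    Green = 4,
    Cyan = 5,
    Yellow = 6,
    White = 7,
}

// Hand-written checked conversion: numbers outside 0..=7 are rejected.
impl TryFrom<u8> for Colour {
    type Error = String;

    fn try_from(value: u8) -> Result<Self, Self::Error> {
        match value {
            0 => Ok(Colour::Black),
            1 => Ok(Colour::Blue),
            2 => Ok(Colour::Red),
            3 => Ok(Colour::Magenta),
            4 => Ok(Colour::Green),
            5 => Ok(Colour::Cyan),
            6 => Ok(Colour::Yellow),
            7 => Ok(Colour::White),
            _ => Err(format!("{} is not a valid colour", value)),
        }
    }
}

// Hypothetical stand-in for SetBorder: only a Colour can be passed in.
// It returns the raw value instead of touching the hardware.
fn set_border(colour: Colour) -> u8 {
    colour as u8
}

fn main() {
    println!("{}", set_border(Colour::Magenta)); // prints "3"
    println!("{:?}", Colour::try_from(8u8)); // prints Err("8 is not a valid colour")
    // set_border(3); // Does not compile: expected Colour, found integer.
}
```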

By default enums use a Byte for the number they represent, but a Word can also be used:

    enum BigEnum[Word]
    {
        LargeValue = 2345;
        LargerValue = 3456;
    }

Enums can even have operators and methods:

    enum Turbo
    {
        Normal = 0;
        Double = 1;
        Quadruple = 2;

        public static Turbo Halve(Turbo turbo)
        {
            if (turbo == Turbo.Normal)
            {
                return Turbo.Normal;
            }

            return Turbo(turbo.GetValue() / 2);
        }
    }

Wondering where the GetValue method above comes from? Enums compile into normal Oakley types that implement the Enum type class, and this type class defines GetValue.
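A Rust version of Turbo with its Halve method, for comparison. Here get_value is an ordinary method standing in for the GetValue that Oakley's Enum type class provides, and from_value is a hypothetical checked conversion playing the role of Turbo(...). A Word-sized enum like BigEnum[Word] maps to #[repr(u16)].

```rust
#[derive(Debug, Clone, Copy, PartialEq)]
#[repr(u8)]
enum Turbo {
    Normal = 0,
    Double = 1,
    Quadruple = 2,
}

impl Turbo {
    // Stand-in for GetValue from Oakley's Enum type class.
    fn get_value(self) -> u8 {
        self as u8
    }

    // Hypothetical checked conversion from a raw value.
    fn from_value(value: u8) -> Option<Turbo> {
        match value {
            0 => Some(Turbo::Normal),
            1 => Some(Turbo::Double),
            2 => Some(Turbo::Quadruple),
            _ => None,
        }
    }

    fn halve(turbo: Turbo) -> Turbo {
        if turbo == Turbo::Normal {
            return Turbo::Normal;
        }
        // Halving 1 or 2 always gives another valid value.
        Turbo::from_value(turbo.get_value() / 2).expect("halved value is valid")
    }
}

// A Word-sized enum, like BigEnum[Word].
#[derive(Debug, Clone, Copy)]
#[repr(u16)]
enum BigEnum {
    LargeValue = 2345,
    LargerValue = 3456,
}

fn main() {
    println!("{:?}", Turbo::halve(Turbo::Quadruple)); // prints "Double"
    println!("{:?}", Turbo::halve(Turbo::Double)); // prints "Normal"
    println!("{} {}", BigEnum::LargeValue as u16, BigEnum::LargerValue as u16); // prints "2345 3456"
}
```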

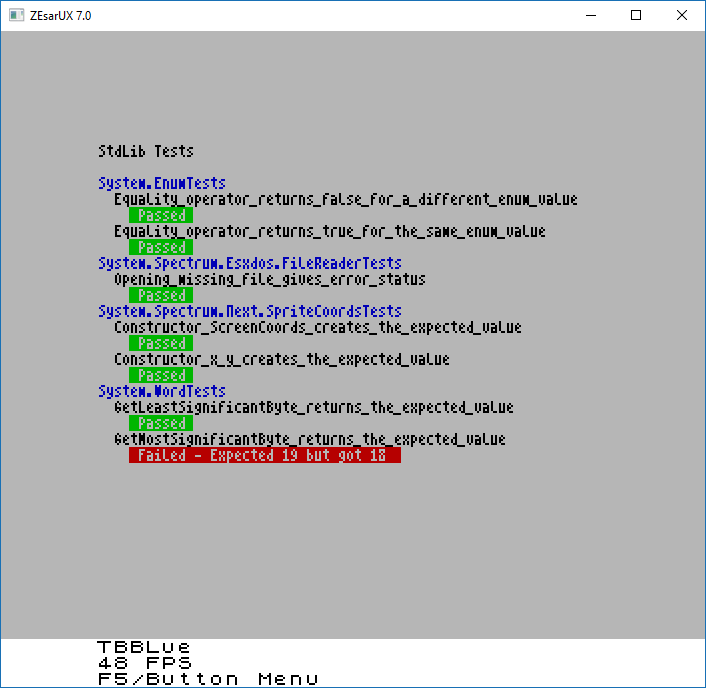

Testing

Automated tests are a good thing. So good that Oakley has a special syntax to make it easier for you to write them:

    tests WordTests
    {
        test GetLeastSignificantByte_returns_the_expected_value
        {
            Word word = 0x1234;
            Byte lsb = word.GetLeastSignificantByte();
            Assert.AreEqual(lsb, 0x34);
        }

        test GetMostSignificantByte_returns_the_expected_value
        {
            Word word = 0x1234;
            Byte msb = word.GetMostSignificantByte();
            Assert.AreEqual(msb, 0x13);
        }
    }

The standard library Assert type contains several checks to test that your values are correct. These tests can then be built into an output file that you can run on the Next or in the emulator of your choice.

Did you notice the mistake in the test above?

Currently the test runner just outputs to the screen, but eventually (i.e. after the alpha release) it will write its output to disk using ESXDOS. This will allow us to combine the output of multiple test runs (because not all of your tests might fit into memory at once) and do more complex things, such as taking a screenshot of the emulator on the PC and comparing it with a saved screen to test display code, or combining the test run with trace output from the emulator to perform code coverage analysis.
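For comparison, Rust builds test support into the language in a similar way: functions marked #[test] are collected and run by cargo test. The byte helpers below are hypothetical stand-ins for Word's GetLeastSignificantByte and GetMostSignificantByte, implemented with a mask and a shift. Note that the most significant byte of 0x1234 is 0x12.

```rust
// Hypothetical stand-ins for Word.GetLeastSignificantByte/GetMostSignificantByte.
fn least_significant_byte(word: u16) -> u8 {
    (word & 0x00FF) as u8
}

fn most_significant_byte(word: u16) -> u8 {
    (word >> 8) as u8
}

// Compiled and run only by `cargo test`.
#[cfg(test)]
mod word_tests {
    use super::*;

    #[test]
    fn least_significant_byte_returns_the_expected_value() {
        assert_eq!(least_significant_byte(0x1234), 0x34);
    }

    #[test]
    fn most_significant_byte_returns_the_expected_value() {
        assert_eq!(most_significant_byte(0x1234), 0x12);
    }
}

fn main() {
    let word: u16 = 0x1234;
    println!("{:#04x} {:#04x}", most_significant_byte(word), least_significant_byte(word));
}
```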

Various Other Things

- An Address type to add a bit of type safety around peeking and poking.

- Got rid of the entrypoint keyword that indicated where the program starts, replacing it with a Program type class that has a Run method.

- Improvements to the generated code to use fewer pointers, which makes things run a bit faster.

- I think the second demo will now run on real hardware; however, I still don't have any real hardware to check. :(
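The idea behind an Address type is the newtype pattern: wrap the raw 16-bit number in its own type so an address can't be mixed up with an ordinary value. Here is a minimal Rust sketch; memory is simulated with a Vec, and Address, Memory, peek and poke are hypothetical names rather than Oakley's standard library API. 0x4000 is the start of the Spectrum's screen memory.

```rust
// Newtype: an Address is a u16, but the compiler treats it as a distinct type.
#[derive(Debug, Clone, Copy, PartialEq)]
struct Address(u16);

// Hypothetical simulated 64 KB memory; real peeks and pokes hit hardware.
struct Memory {
    bytes: Vec<u8>,
}

impl Memory {
    fn new() -> Memory {
        Memory { bytes: vec![0; 0x10000] }
    }

    fn peek(&self, address: Address) -> u8 {
        self.bytes[address.0 as usize]
    }

    fn poke(&mut self, address: Address, value: u8) {
        self.bytes[address.0 as usize] = value;
    }
}

fn main() {
    let mut memory = Memory::new();
    let screen_start = Address(0x4000); // Start of the Spectrum's screen memory.
    memory.poke(screen_start, 0xFF);
    println!("{}", memory.peek(screen_start)); // prints "255"
    // memory.poke(0xFF, screen_start); // Does not compile: arguments swapped.
}
```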

Alpha Release

Soon... There are still a few more boring things I need to do before I can release a compiler:

- The standard library needs a bit more work, although I am hoping other people using an alpha version will help to define the library a bit better. I might just release as-is with my ideas for the library and see what people think.

- A few bugs to fix.

- Some more errors to check for.

- Documentation. I have started, but there's lots more to do.

- Command line options - currently I have the options hard coded into the program...

The memory model still needs a bit of work too, but I think I'm going to release an alpha before that is done. With memory, I want to avoid any dynamic memory allocation and have everything allocated up front. Whilst this might sound restrictive, having 48k to work with is restrictive anyway, meaning you really should be thinking about memory up front, so I might as well force you to do so. :) It will also make things much easier for me when I write the straight-to-assembly version of the compiler (which won't be anytime soon, admittedly). The up-front allocation will also involve some compile-time analysis that will enable lots of clever optimisations as a nice side effect, such as running Oakley code and even tests at compile time. But all of that is the subject of another long and rambling blog post some other day...
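To illustrate what allocating everything up front can look like, here is a minimal Rust sketch of a fixed-capacity pool: its storage size is a compile-time constant, nothing is allocated on the heap, and running out of space is an explicit None rather than a failed allocation. This is a generic technique, not a description of Oakley's memory model, which is still being designed; Pool and its methods are hypothetical.

```rust
// Hypothetical fixed-capacity pool: N slots reserved at compile time, no heap.
struct Pool<T: Copy + Default, const N: usize> {
    items: [T; N],
    used: usize,
}

impl<T: Copy + Default, const N: usize> Pool<T, N> {
    fn new() -> Self {
        Pool { items: [T::default(); N], used: 0 }
    }

    // Hands out the next free slot, or None once the pool is full.
    fn allocate(&mut self, value: T) -> Option<usize> {
        if self.used == N {
            return None;
        }
        self.items[self.used] = value;
        self.used += 1;
        Some(self.used - 1)
    }

    // Reads back an allocated slot; unallocated slots return None.
    fn get(&self, index: usize) -> Option<T> {
        if index < self.used {
            Some(self.items[index])
        } else {
            None
        }
    }
}

fn main() {
    let mut enemies: Pool<u8, 2> = Pool::new();
    println!("{:?}", enemies.allocate(10)); // prints "Some(0)"
    println!("{:?}", enemies.allocate(20)); // prints "Some(1)"
    println!("{:?}", enemies.allocate(30)); // prints "None": the pool is full
    println!("{:?}", enemies.get(1)); // prints "Some(20)"
}
```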